
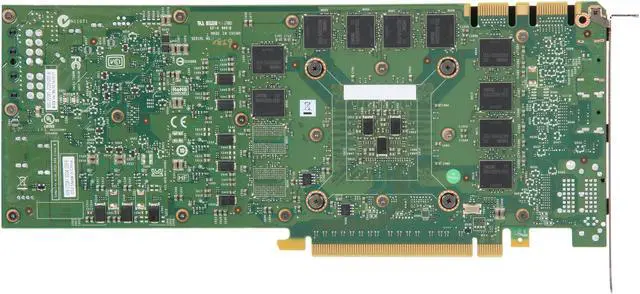

NVIDIA Tesla K-series GPU Accelerators are based on the NVIDIA Kepler compute architecture and powered by CUDA, the world’s most pervasive parallel computing model. The Kepler compute architecture includes technologies such as Dynamic Parallelism and Hyper-Q for higher computing performance, better power efficiency, and record application speeds. The Tesla K20 GPU Accelerator is designed to be the performance leader in double-precision applications and the broader supercomputing market, with up to 1.17 teraflops of peak double-precision performance. Try the NVIDIA Tesla K20 GPU accelerator and speed up your application by up to 10X.

SMX design: The SMX (streaming multiprocessor) design delivers up to 3x more performance per watt compared to the SM in Fermi*. It also delivers one petaflop of computing in just ten server racks. (*Based on DGEMM performance: Tesla M2090 = 410 gigaflops, Tesla K20 (expected) > 1000 gigaflops.)

Dynamic Parallelism capability: Dynamic Parallelism enables GPU threads to automatically spawn new threads. By adapting to the data without going back to the CPU, it greatly simplifies parallel programming. It also enables GPU acceleration of a broader set of popular algorithms, such as adaptive mesh refinement (AMR), fast multipole method (FMM), and multigrid methods.
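As a rough illustration of Dynamic Parallelism, the sketch below has a parent kernel decide, per segment, whether to launch a child kernel, with no round trip to the CPU. The kernel names (`refineFlagged`, `refineSegment`), the helper `blocksFor`, the flag array, and the sizes are all hypothetical and chosen only for this example. The build line assumes a Kepler-era toolkit; adjust `-arch` for your GPU and CUDA version.

```cuda
// Assumed build line: nvcc -arch=sm_35 -rdc=true dp_sketch.cu -lcudadevrt
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: number of blocks needed to cover `count` elements.
__host__ __device__ inline int blocksFor(int count, int threads)
{
    return (count + threads - 1) / threads;
}

// Child kernel: doubles every value in one segment of the array.
__global__ void refineSegment(float *data, int offset, int count)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < count) data[offset + i] *= 2.0f;
}

// Parent kernel: one thread per segment. A thread launches a child grid
// from the GPU only when its segment is flagged for extra work.
__global__ void refineFlagged(float *data, const int *needsRefine,
                              int numSegments, int segmentSize)
{
    int seg = blockIdx.x * blockDim.x + threadIdx.x;
    if (seg < numSegments && needsRefine[seg]) {
        const int threads = 128;
        refineSegment<<<blocksFor(segmentSize, threads), threads>>>(
            data, seg * segmentSize, segmentSize);
    }
}

int main()
{
    const int numSegments = 8;
    const int segmentSize = 256;
    const int n = numSegments * segmentSize;

    float *hData = new float[n];
    for (int i = 0; i < n; ++i) hData[i] = 1.0f;
    int hFlags[numSegments] = {1, 0, 1, 0, 0, 1, 0, 0};  // illustrative flags

    float *dData = NULL;
    int *dFlags = NULL;
    cudaMalloc(&dData, n * sizeof(float));
    cudaMalloc(&dFlags, numSegments * sizeof(int));
    cudaMemcpy(dData, hData, n * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(dFlags, hFlags, sizeof(hFlags), cudaMemcpyHostToDevice);

    // A single host-side launch; child grids finish before the parent grid does.
    refineFlagged<<<1, numSegments>>>(dData, dFlags, numSegments, segmentSize);
    cudaError_t err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        printf("CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }

    cudaMemcpy(hData, dData, n * sizeof(float), cudaMemcpyDeviceToHost);
    for (int s = 0; s < numSegments; ++s)
        printf("segment %d first value: %.1f\n", s, hData[s * segmentSize]);

    cudaFree(dData);
    cudaFree(dFlags);
    delete[] hData;
    return 0;
}
```

The host issues only one launch; which segments receive additional work is decided on the GPU from the data, which is the pattern adaptive algorithms such as AMR rely on.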

Hyper-Q: The Hyper-Q feature enables multiple CPU cores to simultaneously utilize the CUDA cores on a single Kepler GPU. This dramatically increases GPU utilization, slashes CPU idle times, and advances programmability, making it ideal for cluster applications that use MPI.
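One way to picture the kind of workload Hyper-Q targets is several independent work queues feeding one GPU. The sketch below uses CUDA streams in a single process as a stand-in for work submitted by multiple CPU cores; that substitution is an assumption made to keep the example short, and the names `busyKernel` and `iterate`, the stream count, and the iteration count are hypothetical. Whether the kernels actually overlap depends on the GPU and on available resources.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical workload: a short arithmetic loop to keep a thread busy.
__host__ __device__ inline float iterate(float x, int iters)
{
    for (int i = 0; i < iters; ++i) x = x * 1.0001f + 0.5f;
    return x;
}

// Small kernel that occupies only a fraction of the GPU, so several
// copies can run side by side when the hardware allows it.
__global__ void busyKernel(float *out, int iters)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    out[i] = iterate((float)threadIdx.x, iters);
}

int main()
{
    const int numStreams = 8;   // illustrative; one per independent producer
    const int threads = 64;

    cudaStream_t streams[numStreams];
    float *buffers[numStreams];
    for (int s = 0; s < numStreams; ++s) {
        cudaStreamCreate(&streams[s]);
        cudaMalloc(&buffers[s], threads * sizeof(float));
    }

    // Each launch goes into its own stream, so no launch has to wait
    // behind work queued by another stream.
    for (int s = 0; s < numStreams; ++s)
        busyKernel<<<1, threads, 0, streams[s]>>>(buffers[s], 100000);

    cudaError_t err = cudaDeviceSynchronize();
    printf("all streams finished: %s\n", cudaGetErrorString(err));

    for (int s = 0; s < numStreams; ++s) {
        cudaFree(buffers[s]);
        cudaStreamDestroy(streams[s]);
    }
    return err == cudaSuccess ? 0 : 1;
}
```

A profiler timeline is the usual way to check whether the kernels ran concurrently or one after another on a given device.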

ECC memory error protection: Meets a critical requirement for computing accuracy and reliability in data centers and supercomputing centers. Both external and internal memories are ECC protected in Tesla K20 and K20X.

L1 and L2 caches: Accelerate algorithms such as physics solvers, ray tracing, and sparse matrix multiplication, where data addresses are not known beforehand.

Asynchronous transfer with dual DMA engines: Turbocharges system performance by transferring data over the PCIe bus while the computing cores are crunching other data.
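The overlap of transfers and computation described above is typically expressed with pinned host memory, `cudaMemcpyAsync`, and multiple streams. The following is a minimal sketch under assumed sizes; the kernel `scale`, the helper `chunkOffset`, and the chunk count are hypothetical. With two DMA engines, a host-to-device copy for one chunk and a device-to-host copy for another can be in flight while a third chunk is being computed, although the actual overlap depends on the hardware.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: starting element index of a chunk.
static int chunkOffset(int chunk, int chunkSize)
{
    return chunk * chunkSize;
}

// Multiplies each element of one chunk by `factor`.
__global__ void scale(float *d, int n, float factor)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) d[i] *= factor;
}

int main()
{
    const int numChunks = 4;          // illustrative sizes
    const int chunkSize = 1 << 20;
    const int n = numChunks * chunkSize;
    const size_t chunkBytes = chunkSize * sizeof(float);

    float *hData = NULL;
    float *dData = NULL;
    cudaMallocHost(&hData, n * sizeof(float));  // pinned memory, required for true async copies
    cudaMalloc(&dData, n * sizeof(float));
    for (int i = 0; i < n; ++i) hData[i] = 1.0f;

    cudaStream_t streams[numChunks];
    for (int c = 0; c < numChunks; ++c) cudaStreamCreate(&streams[c]);

    // Per chunk: copy in, compute, copy out. Operations within one stream
    // run in order; operations in different streams are free to overlap.
    for (int c = 0; c < numChunks; ++c) {
        int off = chunkOffset(c, chunkSize);
        cudaMemcpyAsync(dData + off, hData + off, chunkBytes,
                        cudaMemcpyHostToDevice, streams[c]);
        scale<<<(chunkSize + 255) / 256, 256, 0, streams[c]>>>(
            dData + off, chunkSize, 2.0f);
        cudaMemcpyAsync(hData + off, dData + off, chunkBytes,
                        cudaMemcpyDeviceToHost, streams[c]);
    }

    cudaError_t err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        printf("CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    printf("first = %.1f, last = %.1f\n", hData[0], hData[n - 1]);

    for (int c = 0; c < numChunks; ++c) cudaStreamDestroy(streams[c]);
    cudaFree(dData);
    cudaFreeHost(hData);
    return 0;
}
```

Splitting the data into chunks is what creates the opportunity for overlap; a single large copy followed by a single kernel would leave the compute cores idle during the transfer.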
